🗿 Mythological Storyteller

- Fine-tuned GPT-Neo 125M using a custom training pipeline that integrated structured mythological metadata with narrative text.

- Employed data augmentation techniques and a sliding-window approach to expand limited Kaggle datasets.
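The metadata-plus-narrative formatting and the sliding window above might look roughly like the sketch below. It assumes hypothetical record fields (`culture`, `figure`, `story`) and splits on whitespace as a stand-in for the GPT-Neo tokenizer. Each window of the story gets its own copy of the metadata header, so every training chunk keeps its context. This is one reasonable design, not necessarily the project's exact pipeline.

```python
from typing import Dict, List


def sliding_windows(tokens: List[str], window: int, stride: int) -> List[List[str]]:
    """Split a token sequence into overlapping windows.

    Windows start `stride` tokens apart, so neighbours overlap by
    `window - stride` tokens. A final window is aligned to the end of the
    sequence so trailing tokens are never dropped.
    """
    if window <= 0 or stride <= 0:
        raise ValueError("window and stride must be positive")
    if len(tokens) <= window:
        return [tokens]
    starts = list(range(0, len(tokens) - window + 1, stride))
    if starts[-1] + window < len(tokens):
        starts.append(len(tokens) - window)
    return [tokens[s:s + window] for s in starts]


def build_examples(record: Dict[str, str], window: int, stride: int) -> List[str]:
    """Prefix every story window with the record's metadata (hypothetical fields)."""
    header = f"[Culture: {record['culture']}] [Figure: {record['figure']}]"
    story_tokens = record["story"].split()  # whitespace split stands in for a real tokenizer
    return [header + "\n" + " ".join(chunk)
            for chunk in sliding_windows(story_tokens, window, stride)]


# Usage: one short story becomes three overlapping training examples.
record = {
    "culture": "Norse",
    "figure": "Thor",
    "story": "Thor rode his chariot across the storm-dark sky and thunder followed",
}
examples = build_examples(record, window=6, stride=3)
for ex in examples:
    print(ex, end="\n\n")
```

A smaller stride produces more (and more redundant) examples from the same text, which is the main lever for stretching a small dataset.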

Trained the model with PyTorch and Hugging Face Transformers using mixed-precision (fp16) training for efficient resource use, and hosted it on the Hugging Face Hub for scalable deployment.

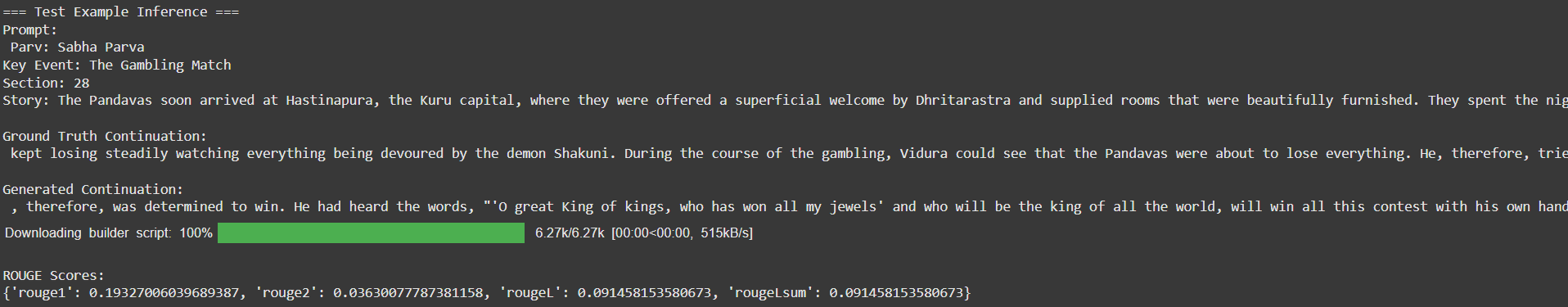

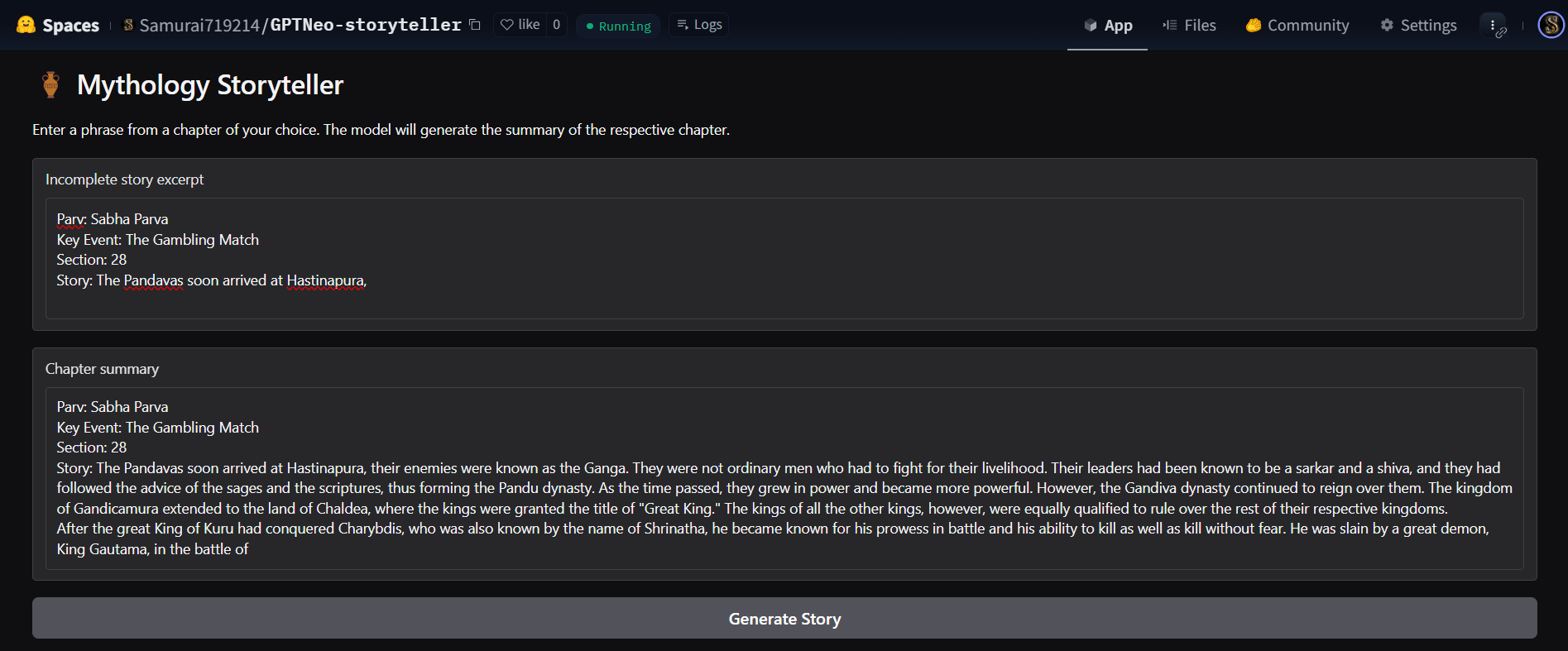

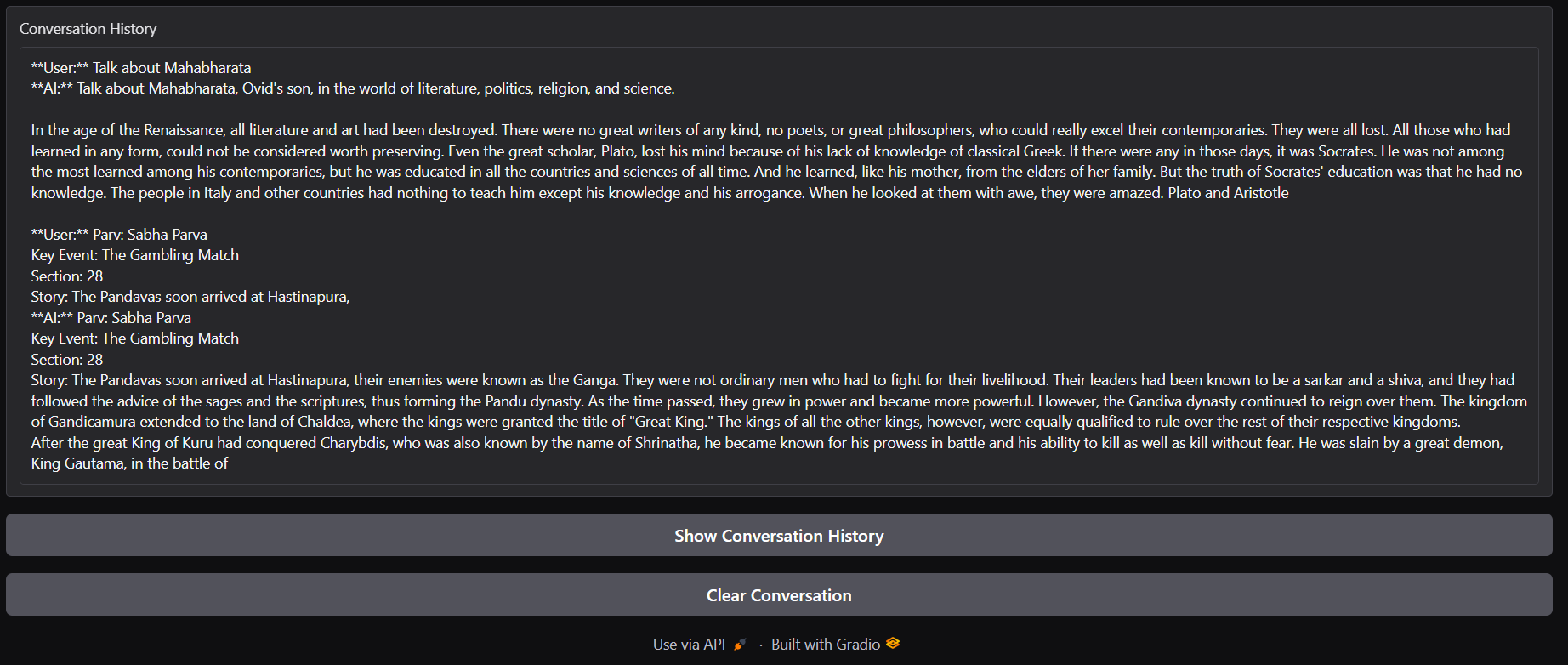

Deployed an interactive Gradio web app that accepts incomplete story excerpts, automatically prepends contextual instructions, and uses sampling-based inference (controlled by temperature, top-p, and a no-repeat n-gram constraint) for creative text generation.

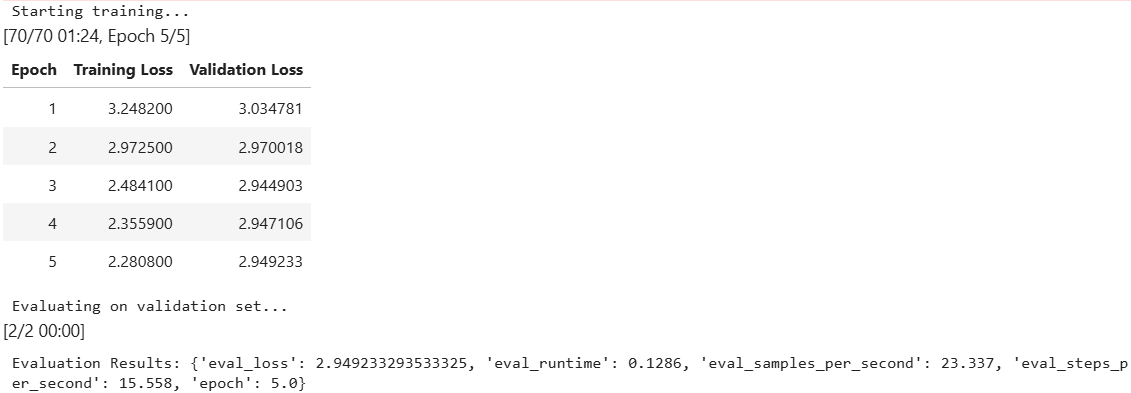

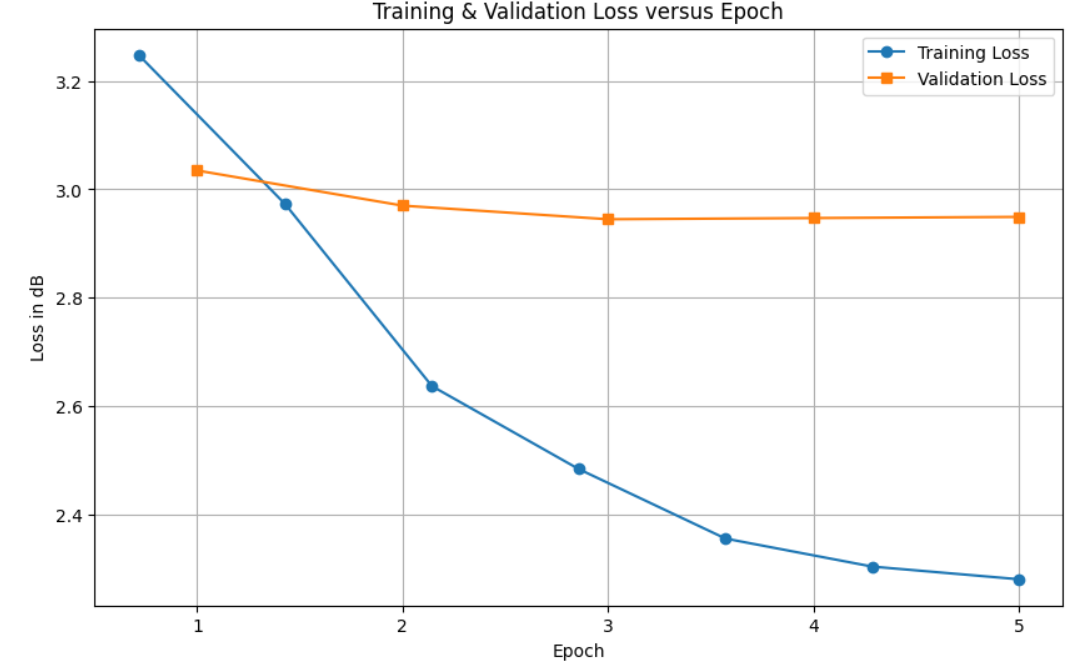
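To make the decoding parameters concrete, here is a from-scratch sketch of prompt construction and a single sampling step over a toy `{token_id: logit}` map. The instruction text is hypothetical. In practice, Transformers' `model.generate` exposes the same controls as `do_sample=True`, `temperature`, `top_p`, and `no_repeat_ngram_size`; this code only illustrates what those controls do.

```python
import math
import random
from typing import Dict, Optional, Sequence, Set

# Hypothetical instruction text; the app's actual prompt is not documented here.
INSTRUCTION = "Continue this myth in the voice of an ancient storyteller:\n"


def build_prompt(excerpt: str) -> str:
    """Prepend the contextual instruction to the user's partial story."""
    return INSTRUCTION + excerpt.strip()


def banned_tokens(history: Sequence[int], n: int) -> Set[int]:
    """Tokens that would repeat an n-gram already present in `history`."""
    if n <= 0 or len(history) < n:
        return set()
    prefix = tuple(history[len(history) - (n - 1):])  # last n-1 generated tokens
    banned = set()
    for i in range(len(history) - n + 1):
        if tuple(history[i:i + n - 1]) == prefix:
            banned.add(history[i + n - 1])
    return banned


def sample_next(
    logits: Dict[int, float],
    history: Sequence[int],
    temperature: float = 0.9,
    top_p: float = 0.9,
    no_repeat_ngram_size: int = 3,
    rng: Optional[random.Random] = None,
) -> int:
    """Pick one token id: n-gram blocking, then temperature, then top-p."""
    rng = rng or random.Random()
    banned = banned_tokens(history, no_repeat_ngram_size)
    allowed = {t: s for t, s in logits.items() if t not in banned}
    if not allowed:
        raise ValueError("every candidate token is blocked")
    # Temperature-scaled softmax; subtracting the max keeps exp() stable.
    scaled = {t: s / temperature for t, s in allowed.items()}
    peak = max(scaled.values())
    exps = {t: math.exp(v - peak) for t, v in scaled.items()}
    total = sum(exps.values())
    ranked = sorted(((t, e / total) for t, e in exps.items()),
                    key=lambda tp: tp[1], reverse=True)
    # Nucleus: keep the smallest high-probability prefix whose mass reaches top_p.
    nucleus, mass = [], 0.0
    for token, prob in ranked:
        nucleus.append((token, prob))
        mass += prob
        if mass >= top_p:
            break
    tokens = [t for t, _ in nucleus]
    weights = [p for _, p in nucleus]
    return rng.choices(tokens, weights=weights, k=1)[0]
```

In the example history `[1, 2, 3, 1, 2]`, the trigram `(1, 2, 3)` already occurred, so token `3` is blocked even if it has the highest logit. Lower temperatures and smaller `top_p` values make the output more conservative; higher values make it more varied.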

- Evaluated model performance with quantitative metrics such as ROUGE, and tuned hyperparameters (learning rate, batch size, warmup steps) to balance fitting the training data against generalization.
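The README does not specify which ROUGE variant was used. As a minimal illustration, the sketch below computes ROUGE-1 (unigram overlap) and ROUGE-L (longest common subsequence) F1 with lowercase whitespace tokenization and no stemming. Established packages such as `rouge_score` or the Hugging Face `evaluate` ROUGE metric add stemming and more careful tokenization and would be the usual choice in practice.

```python
from collections import Counter


def _f1(overlap: int, ref_len: int, cand_len: int) -> float:
    """Harmonic mean of precision and recall; 0.0 when nothing overlaps."""
    if overlap == 0:
        return 0.0
    precision = overlap / cand_len
    recall = overlap / ref_len
    return 2 * precision * recall / (precision + recall)


def rouge1_f1(reference: str, candidate: str) -> float:
    """ROUGE-1 F1: clipped unigram overlap between reference and candidate."""
    ref, cand = reference.lower().split(), candidate.lower().split()
    overlap = sum((Counter(ref) & Counter(cand)).values())
    return _f1(overlap, len(ref), len(cand))


def lcs_length(a: list, b: list) -> int:
    """Longest common subsequence length via DP with one rolling row."""
    prev = [0] * (len(b) + 1)
    for x in a:
        curr = [0]
        for j, y in enumerate(b, start=1):
            curr.append(prev[j - 1] + 1 if x == y else max(prev[j], curr[j - 1]))
        prev = curr
    return prev[-1]


def rougeL_f1(reference: str, candidate: str) -> float:
    """ROUGE-L F1: LCS length used as the overlap count."""
    ref, cand = reference.lower().split(), candidate.lower().split()
    return _f1(lcs_length(ref, cand), len(ref), len(cand))


ref = "the hero slew the serpent"
gen = "the serpent was slain by the hero"
print(round(rouge1_f1(ref, gen), 3))  # 0.667
print(round(rougeL_f1(ref, gen), 3))  # 0.333
```

The gap between the two scores shows why ROUGE-L is stricter: the generated sentence reuses most of the reference's words but in a different order, which unigram overlap ignores and LCS penalizes.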