❓FAQ Generator

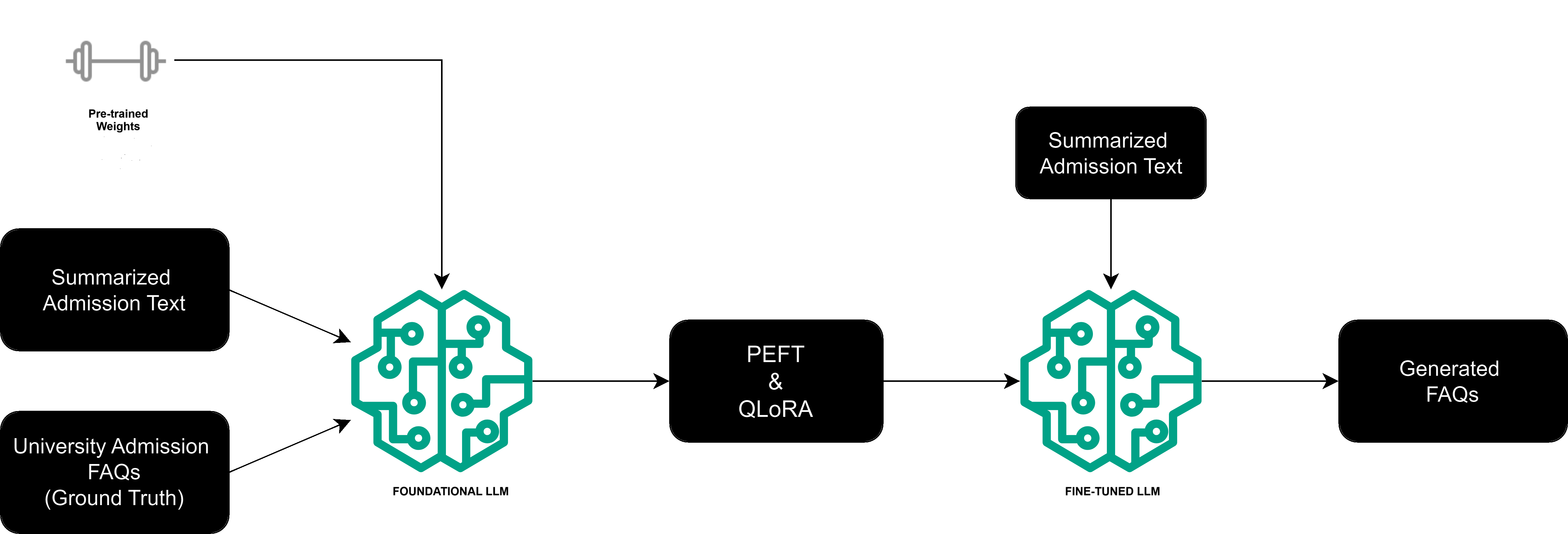

- Fine-tuned LLaMA-3 8B, LLaMA-2 7B, Mistral 7B, T5, and BART with supervised instruction tuning to generate FAQs from a website’s content. The models were loaded through the Hugging Face API, and the NLTK tokenizer was used.

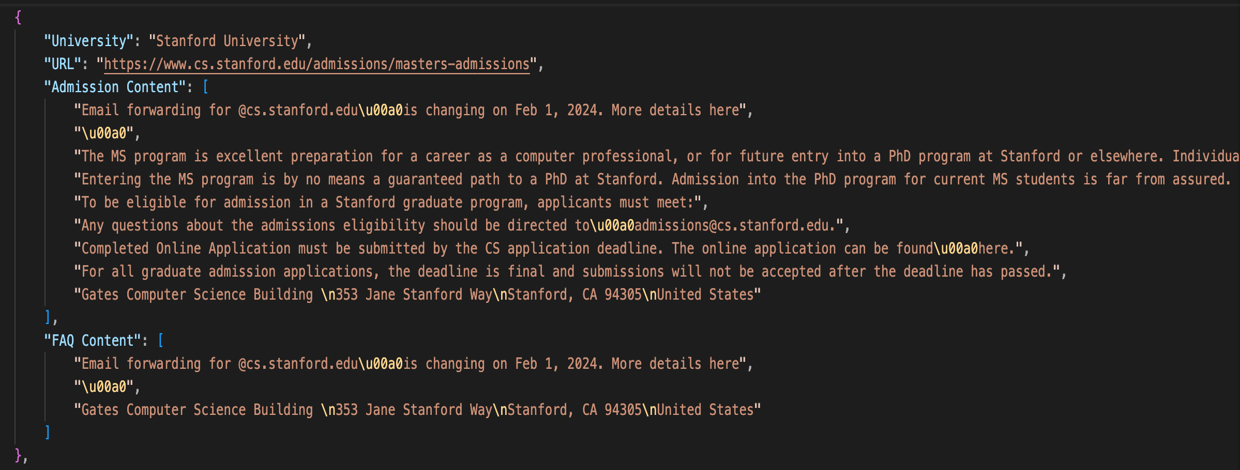

- Scraped (with Beautiful Soup) the MS in CS graduate admission requirements of the top 150 US universities and stored them in a JSON file (dataset creation).

- Performed QLoRA PEFT on LLaMA-3 and LLaMA-2 to enhance the quality of the generated FAQs.

- Achieved a BERTScore of 0.8, outperforming the baseline T5 model with a 50% increase in the accuracy and relevance of the generated FAQs.
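
The dataset-creation and instruction-tuning steps above can be illustrated with a minimal sketch. It uses only the Python standard library and shows how scraped records might be stored as JSON and turned into instruction-style prompts. The record fields (`university`, `program`, `requirements`), the prompt template, and the function names are assumptions made for illustration; the project's actual schema and prompt format are not shown here.

```python
import json

# Hypothetical record layout: the project's real JSON schema is not shown,
# so these field names and values are placeholders for illustration only.
records = [
    {
        "university": "Example University",
        "program": "MS in Computer Science",
        "requirements": "GRE optional. Three letters of recommendation.",
    }
]

# Assumed instruction-style template; the actual prompt format may differ.
PROMPT_TEMPLATE = (
    "### Instruction:\n"
    "Generate FAQs from the admission requirements below.\n\n"
    "### Input:\n{context}\n\n"
    "### Response:\n"
)


def to_json(items):
    """Serialize scraped records to a JSON string."""
    return json.dumps(items, ensure_ascii=False, indent=2)


def save_dataset(items, path):
    """Write the scraped records to a JSON file."""
    with open(path, "w", encoding="utf-8") as f:
        f.write(to_json(items))


def build_prompt(record):
    """Turn one record into an instruction-style prompt for fine-tuning."""
    context = (
        f"{record['university']} ({record['program']}): "
        f"{record['requirements']}"
    )
    return PROMPT_TEMPLATE.format(context=context)


if __name__ == "__main__":
    print(build_prompt(records[0]))
```

During supervised fine-tuning, each prompt would be paired with reference FAQs as the target text; that pairing is omitted here because the source does not describe the reference data.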